In aviation, practical drift is a set of circumstances in which actual performance varies from the designed performance as a result of factors that may or may not be under the direct control of the organisation, and which may affect the safety management of the airline. ICAO believes that practical drift is inevitable, primarily due to human factors. A deviation from the norm can be harmonised through good processes, good analysis and good culture.

There are many causes of accidents and serious incidents. Some of these are well understood and documented (e.g. Reason’s Swiss Cheese Model). Often, the final accident is the result of a large number of unrelated, minor issues that have suddenly manifested in an unexpected manner. These minor issues can be thought of as “Latent Issues” that are lurking within the business and often go undetected until a major problem materialises.

If an organisation can conduct meaningful trending on the mundane, there is every likelihood that it will be able to identify and rectify these latent issues before they combine to cause an accident. Clearly there are many areas that could hide latent issues, and it is important that they are understood and identified as leading indicators of something more sinister. Latent Issues can be thought of as unseen holes in the usual defences against an accident (Technology, Training and Regulation) and, when combined with the effects of practical drift, the results can be catastrophic if left unchecked.

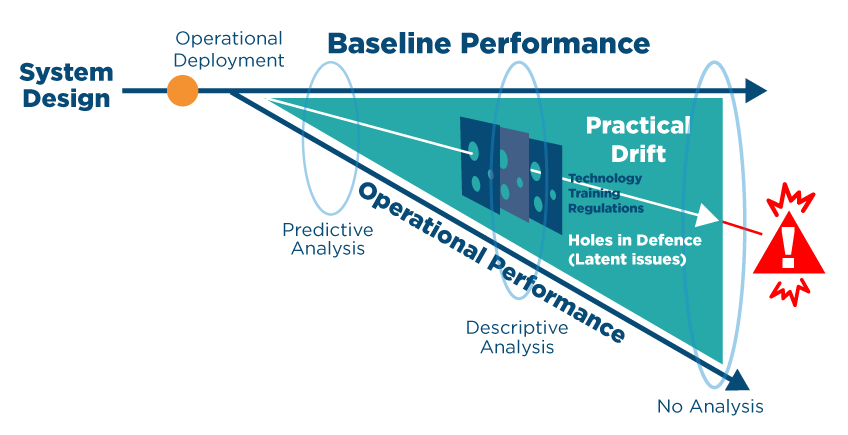

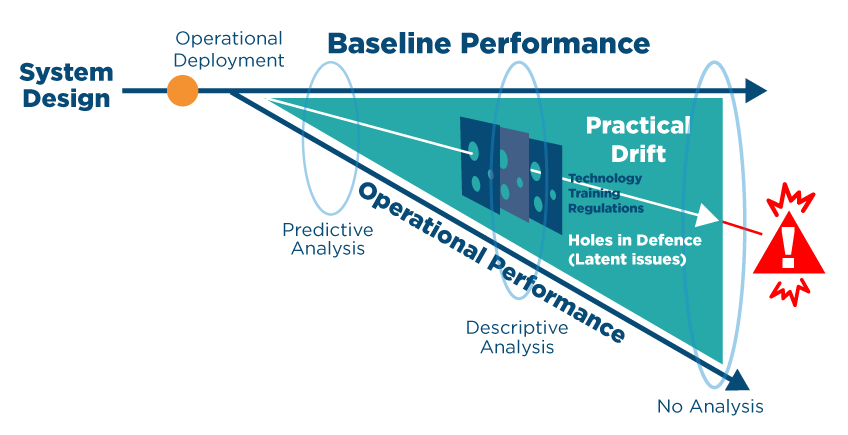

Below are excerpts taken from ICAO Document 9859 that explain the concept of “Practical Drift” and show how, over time, the performance of any system will deteriorate. The original theory was proposed by Scott A. Snook.

If an organisation is cognisant of the theory surrounding practical drift, it can look out for underlying trends by using careful data analysis techniques. In so doing, the business will be able to identify leading indicators of a serious incident and then take appropriate action to prevent such events from materialising.

One contributor to practical drift that is not discussed in detail (by ICAO) is that of complacency. All organisations must guard against this as it is often a major cause of a deterioration in safety performance. Think of how often you might check your tyre pressures before embarking on a long car journey. Probably not that often because they are always ok (or are they?).

Tasks, procedures, and equipment are often initially designed and planned in a theoretical environment, under ideal conditions, with an implicit assumption that nearly everything can be predicted and controlled, and where everything functions as expected. This is usually based on three fundamental assumptions:

- Technology needed to achieve the system production goals is available;

- Personnel are trained, competent and motivated to properly operate the technology as intended; and

- Policy and procedures will dictate system and human behaviour.

These assumptions underlie the baseline (or ideal) system performance, which can be graphically presented as a straight line from the start of operational deployment as shown in the figure below:

Once operationally deployed, the system should ideally perform as designed, following baseline performance (blue line) most of the time. In reality, the operational performance often differs from the assumed baseline performance as a consequence of real-life operations in a complex, ever-changing and usually demanding environment (red line). Since the drift is a consequence of daily practice, it is referred to as “practical drift”. The term “drift” is used in this context to mean the gradual departure from an intended course due to external influences.

Snook contends that practical drift is inevitable in any system, no matter how careful and well thought out its design. Some of the reasons for practical drift include:

- Technology that does not operate as predicted;

- Procedures that cannot be executed as planned under certain operational conditions;

- Changes to the system, including the addition of components;

- Interactions with other systems;

- Safety culture;

- Adequacy (or inadequacy) of resources (e.g. support equipment);

- Learning from successes and failures to improve the operations;

- Complacency in the workforce, and so forth.

In reality, people will generally make the system work on a daily basis despite the system’s shortcomings, applying local adaptations (or workarounds) and personal strategies. These workarounds may bypass the protection of existing safety risk controls and defences.

Safety assurance activities such as audits, observations and monitoring of SPIs can help to expose activities that are “practically drifting”. Analysing the safety information to find out why the drift is happening helps to mitigate the safety risks. The closer to the beginning of the operational deployment that practical drift is identified, the easier it is for the organisation to intervene. This is dependent on the level and efficacy of the types of data analysis used.
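As an illustration of the kind of SPI monitoring described above, the sketch below flags the first reporting period in which the rolling average of an indicator moves away from its baseline by more than a set tolerance. It is a minimal example under stated assumptions, not a method prescribed by ICAO: the function name `detect_drift`, the three-period window, the tolerance and the monthly figures are all hypothetical and chosen only to show the idea.

```python
from statistics import mean


def detect_drift(spi_values, baseline, tolerance, window=3):
    """Return the index of the first period whose rolling mean deviates
    from the baseline by more than the tolerance, or None if none does.

    All names and thresholds here are illustrative assumptions.
    """
    for end in range(window, len(spi_values) + 1):
        # Average the most recent `window` periods to smooth out noise.
        rolling = mean(spi_values[end - window:end])
        if abs(rolling - baseline) > tolerance:
            return end - 1  # index of the last period in the window
    return None


# Hypothetical monthly SPI readings (e.g. events per 1,000 flights).
monthly_rate = [2.0, 2.1, 1.9, 2.2, 2.4, 2.6, 2.9, 3.1]
first_alert = detect_drift(monthly_rate, baseline=2.0, tolerance=0.3)
print(first_alert)
```

A rolling average is used here because a single unusual month is less informative than a sustained movement away from the baseline. A shorter window or tighter tolerance would raise the alert earlier, at the cost of more false alarms.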


Photo by L.Filipe C.Sousa on Unsplash